Order Matters: Learning Element Ordering for Graphic Design Generation

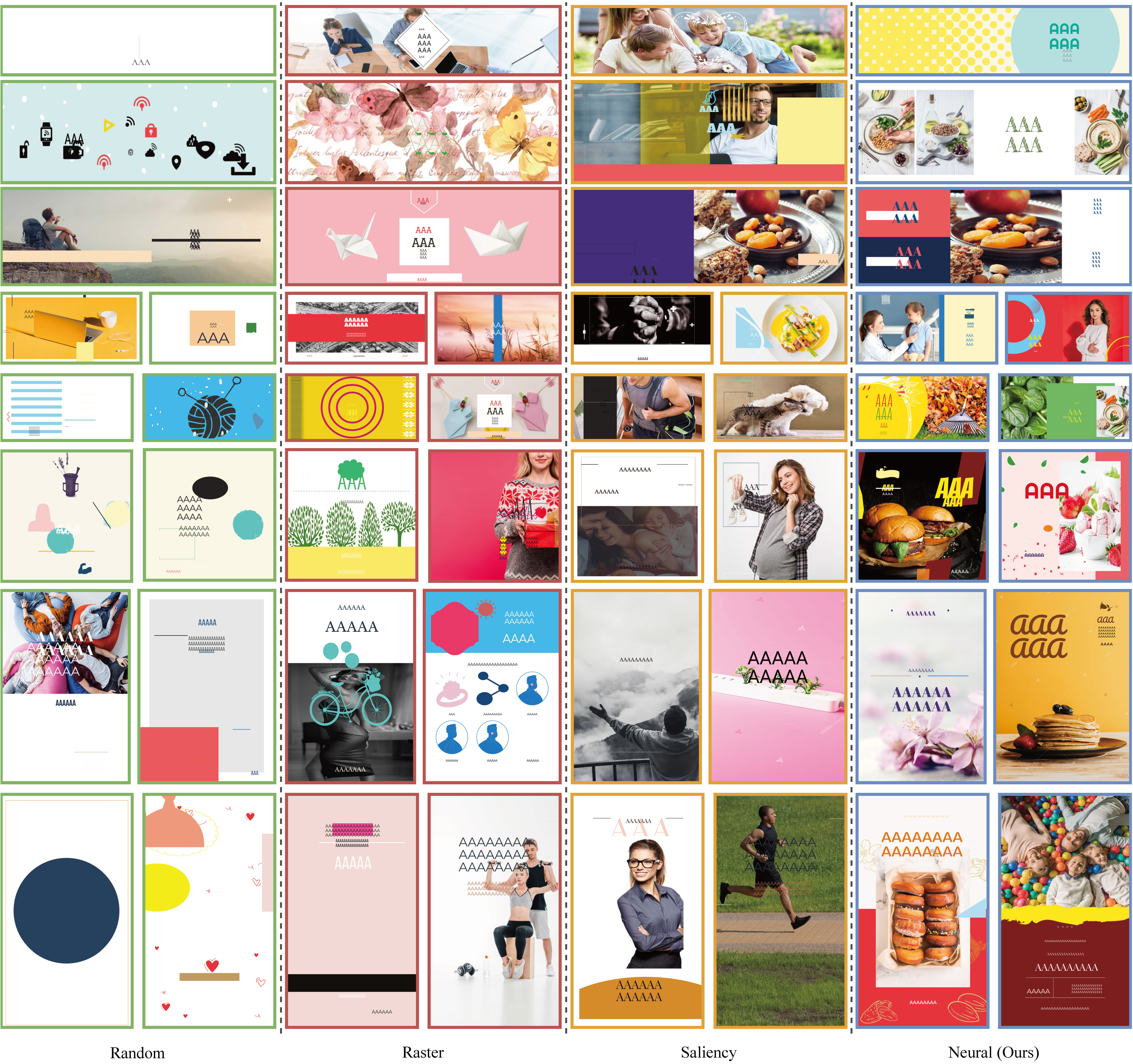

The past few years have witnessed an emergent interest in building generative models for the graphic design domain. To adopt powerful deep generative models with Transformer-based neural backbones, prior approaches formulate designs as ordered sequences of elements, and simply order the elements randomly or in raster order. We argue that such naive ordering methods are sub-optimal and that sample quality can be improved through a better choice of order between graphic design elements.

In this paper, we explore the space of orderings to find the ordering strategy that optimizes the performance of graphic design generation models. To this end, we propose the Generative Order Learner (GOL), a model that trains an autoregressive generator on design sequences jointly with an ordering network that sorts design elements to maximize generation quality.

With unsupervised training on vector graphic design data, our model learns a content-adaptive ordering approach, called neural order. Our experiments show that a generator trained with our neural order converges faster and achieves markedly better generation quality than generators trained with alternative ordering baselines. We conduct a comprehensive analysis of our learned order to better understand its ordering behaviors. In addition, our learned order generalizes well to diffusion-based generative models and helps design generators scale up.

Given a training dataset of vector graphic designs (or rasterized graphic designs with element-level annotations), we aim to order the elements within each design so that the performance of a design generator, trained on the reordered design data, can be maximized. Instead of enforcing any heuristic rules as in existing works, we seek to learn an ordering approach from graphic design data, without any human annotations on element ordering.

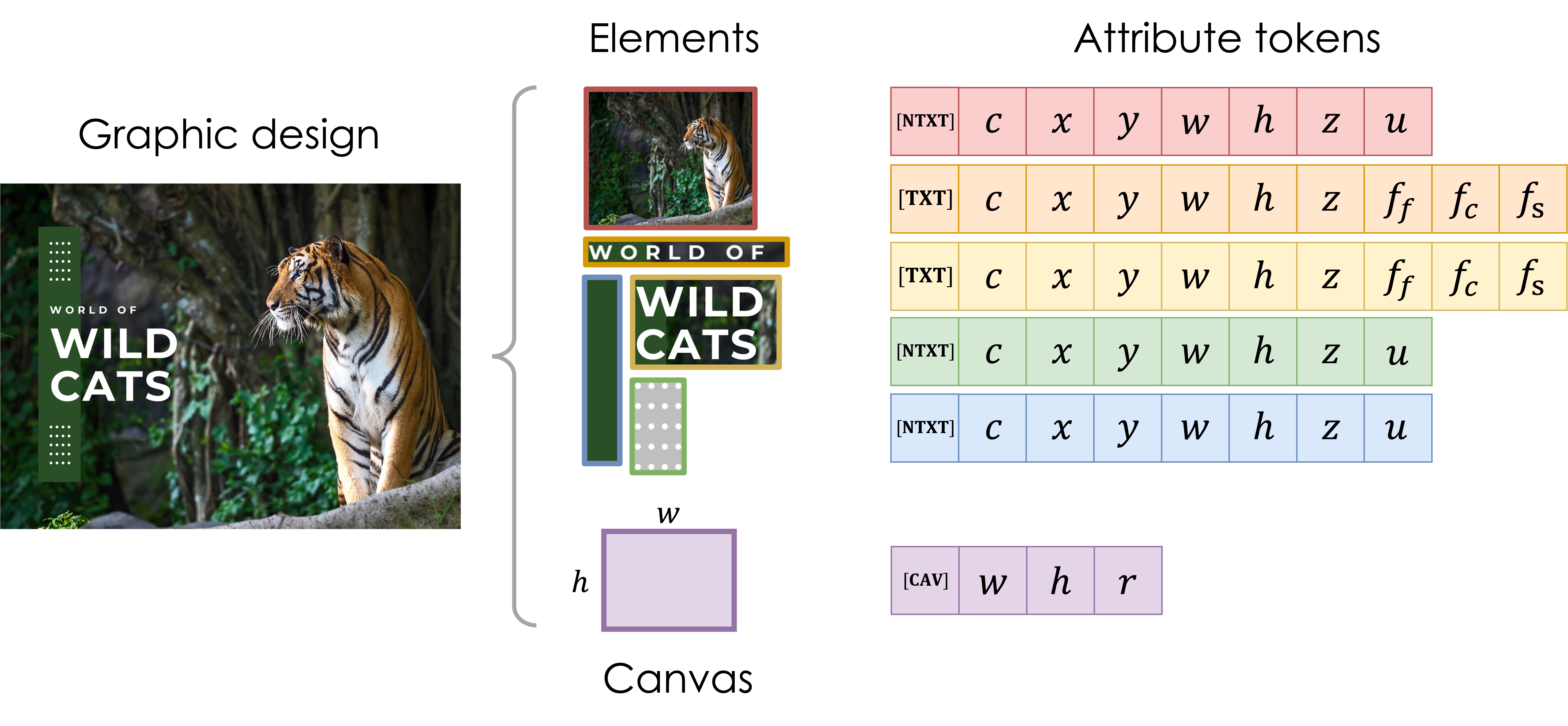

Design representation. A graphic design is decomposed into a canvas and a set of basic elements. Each element is represented as a subsequence of discrete attribute tokens, starting with either [TXT] (text) or [NTXT] (non-text) to indicate its general category. The canvas is also converted into a discrete subsequence with [CAV] at the beginning. All the subsequences are concatenated to produce a sequential representation of the design.
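The serialization described above can be illustrated with a minimal sketch. Only the [CAV], [TXT], and [NTXT] markers come from the description; the attribute set (position and size), the 64-bin quantization, the token names such as `x_6`, and the choice to place the canvas subsequence first are assumptions made for illustration, not the paper's actual vocabulary.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Element:
    """Hypothetical element record; attributes are normalized to [0, 1]."""
    is_text: bool
    x: float
    y: float
    w: float
    h: float


def quantize(value: float, bins: int = 64) -> int:
    """Map a value in [0, 1] to one of `bins` discrete indices."""
    value = min(max(value, 0.0), 1.0)
    return min(int(value * bins), bins - 1)


def element_tokens(e: Element, bins: int = 64) -> List[str]:
    """One element -> category marker followed by its attribute tokens."""
    head = "[TXT]" if e.is_text else "[NTXT]"
    attrs = (("x", e.x), ("y", e.y), ("w", e.w), ("h", e.h))
    return [head] + [f"{name}_{quantize(v, bins)}" for name, v in attrs]


def serialize(canvas_w: float, canvas_h: float, elements: Sequence[Element],
              order: Optional[Sequence[int]] = None, bins: int = 64) -> List[str]:
    """Concatenate the canvas subsequence and the element subsequences.

    `order` is a permutation of element indices; by default the input
    order is kept. Canvas-first placement is an assumption.
    """
    if order is None:
        order = range(len(elements))
    if sorted(order) != list(range(len(elements))):
        raise ValueError("order must be a permutation of the element indices")
    tokens = ["[CAV]", f"cw_{quantize(canvas_w, bins)}", f"ch_{quantize(canvas_h, bins)}"]
    for i in order:
        tokens.extend(element_tokens(elements[i], bins))
    return tokens


elems = [Element(True, 0.1, 0.2, 0.3, 0.4), Element(False, 0.5, 0.5, 0.25, 0.25)]
tokens = serialize(1.0, 0.5, elems)
reordered = serialize(1.0, 0.5, elems, order=[1, 0])
```

Changing `order` rearranges the element subsequences without altering their contents, which is the degree of freedom that an element ordering controls.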

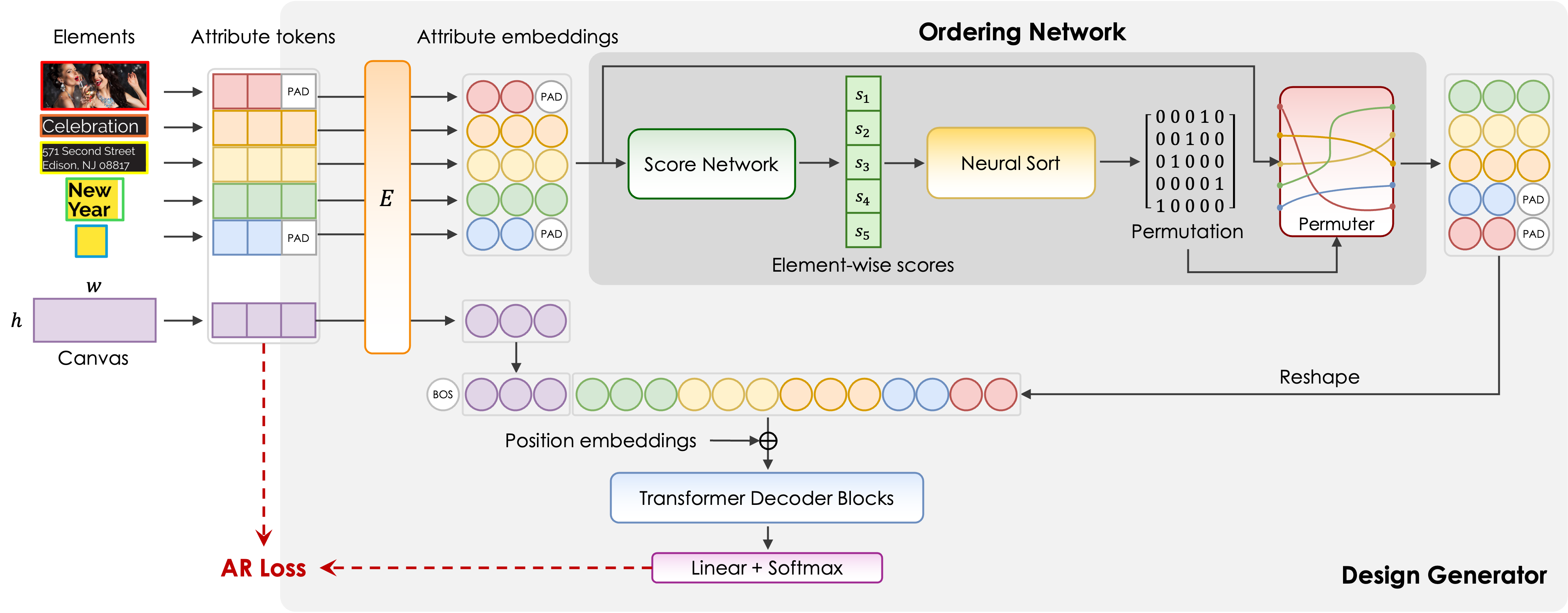

Our framework consists of a design generator and an ordering network embedded within it. With designs formatted as sequences of discrete tokens, the design generator learns a generative model of design sequences based on a Transformer backbone that captures contextual relationships between design tokens. The ordering network learns to permute the elements in a design according to their geometric and visual properties, producing the ordered sequences on which the design generator is trained. The weights of both the design generator and the ordering network are updated simultaneously during training so that each improves the other: a stronger design generator provides a more useful supervisory signal for the ordering network, while a better ordering network contributes to the performance of the design generator.
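The text above does not specify how the ordering network is parameterized or how its supervisory signal is computed, so the following is only a sketch of one common way to make an element ordering learnable, not the GOL implementation. It assumes a hypothetical linear scorer over per-element features and a Plackett-Luce distribution over permutations; the function names and feature layout are all illustrative.

```python
import math
import random
from typing import List, Sequence


def score_elements(features: Sequence[Sequence[float]],
                   weights: Sequence[float]) -> List[float]:
    """Hypothetical ordering network: one linear score per element."""
    return [sum(w * f for w, f in zip(weights, feat)) for feat in features]


def greedy_order(scores: Sequence[float]) -> List[int]:
    """Deterministic order: element indices sorted by descending score."""
    return sorted(range(len(scores)), key=lambda i: -scores[i])


def sample_order(scores: Sequence[float], rng: random.Random) -> List[int]:
    """Sample a permutation from the Plackett-Luce distribution.

    Sorting scores perturbed by independent Gumbel noise is equivalent
    to drawing elements one by one with softmax probabilities.
    """
    noisy = []
    for s in scores:
        u = max(rng.random(), 1e-12)  # avoid log(0)
        noisy.append(s - math.log(-math.log(u)))
    return sorted(range(len(noisy)), key=lambda i: -noisy[i])


def log_prob_order(scores: Sequence[float], order: Sequence[int]) -> float:
    """Plackett-Luce log-probability of `order` under `scores`."""
    remaining = list(order)
    total = 0.0
    for idx in order:
        m = max(scores[j] for j in remaining)
        log_z = m + math.log(sum(math.exp(scores[j] - m) for j in remaining))
        total += scores[idx] - log_z
        remaining.remove(idx)
    return total


feats = [[0.1, 0.2], [0.9, 0.1], [0.5, 0.5]]  # illustrative element features
scores = score_elements(feats, weights=[1.0, -1.0])
best = greedy_order(scores)
sampled = sample_order(scores, random.Random(0))
```

Under this assumed setup, a joint update could weight `log_prob_order` of a sampled order by a reward derived from the generator's likelihood of the reordered sequence (a score-function estimator), while the generator takes its usual gradient step on the same sequence. Whether GOL uses this or a different relaxation is not stated here.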

Qualitative comparison of samples generated from models trained with different orders. Training with our neural order yields noticeable improvements in generation quality.

@article{yang2025order,
  author = {Yang, Bo and Cao, Ying},
  title = {Order Matters: Learning Element Ordering for Graphic Design Generation},
  year = {2025},
  issue_date = {August 2025},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {44},
  number = {4},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3730858},
  doi = {10.1145/3730858},
  journal = {ACM Trans. Graph.},
  articleno = {34},
  numpages = {16}
}